Has the AI era come to video games already?

Generated by DALL·E

With all the noise ChatGPT has made, many industries have noticed the value of LLM technologies. Unsurprisingly, the video game industry is one of them. In this post, I introduce several cool demos and works in progress that I’ve recently found, and share my opinions on why they might profoundly influence the future of the video game industry. I also try to explain the current difficulties and possible directions for solving them. At the end, I share some dreams about future games. I believe the era of AI has come to video games!

The Matrix AI-Powered NPCs demo by Replica Studios

Players are used to chatting with NPCs, but most of these conversations are scripted. Currently, the best conversational experience you can have with NPCs is selecting from several possible responses, which gives you some freedom to steer the dialogue.

If you are a game lover, have you ever dreamt about talking to NPCs as if they were other human players? Well, this is definitely possible now, and Replica Studios has already made a demo of it[1]. Instead of looping over pre-scripted lines, Replica Studios attached LMs (probably OpenAI’s ChatGPT) to the NPCs, allowing each of them to speak in character. You can even chat with NPCs directly using your voice, and they will speak back. Take a look at this YouTube video[2] of the demo.

In many games, the plot is driven (or better put, reflected) by chatting with NPCs. But since LLM chatbots can have randomness in their responses, maybe in the future game progression could be taken anywhere, so that every player has a unique experience in the same game. This is already partly true in the text game AI Dungeon[3].

The Matrix demo may look sleek in the video, but in reality it can take around 10 seconds to get a response from an NPC. This lag is probably due to many users calling the LLM API at the same time, and to the slow processing of several separate modules, such as ASR (automatic speech recognition) and TTS (text-to-speech). Besides, current general-purpose LLMs like ChatGPT have a very large number of parameters, which means long processing times. Potential solutions include training bespoke, smaller chatbot models, and maybe even audio-to-audio models so that the pipeline is simplified.
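To see where the seconds go in a voice pipeline like this, it helps to time each stage separately, because the stages run one after another and their latencies add up. Below is a minimal sketch of such a timing harness. The three stage functions are stand-ins I made up for illustration; they do not call any real ASR, LLM, or TTS service, so the numbers they print say nothing about the actual demo.

```python
import time
from typing import Any, Callable, Dict, List, Tuple

Stage = Tuple[str, Callable[[Any], Any]]


def run_timed(stages: List[Stage], payload: Any) -> Tuple[Any, Dict[str, float]]:
    """Run stages one after another and record the seconds each one took."""
    timings: Dict[str, float] = {}
    for name, fn in stages:
        start = time.perf_counter()
        payload = fn(payload)  # the output of one stage feeds the next
        timings[name] = time.perf_counter() - start
    return payload, timings


# Hypothetical stand-ins: a real game would call speech and LLM services here.
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(text: str) -> str:
    return f"NPC reply to: {text}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


stages = [("asr", fake_asr), ("llm", fake_llm), ("tts", fake_tts)]
audio_out, timings = run_timed(stages, b"hello there")

# In a sequential pipeline, the player waits for the sum of all stages.
for name, seconds in timings.items():
    print(f"{name}: {seconds * 1000:.3f} ms")
print(f"end-to-end: {sum(timings.values()) * 1000:.3f} ms")
```

Swapping a stand-in for a real call shows which stage dominates, which is the first thing to know before deciding whether a smaller model or a merged audio-to-audio stage would help most.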

Herika by Dwemer Dynamics

Every player being able to have unique conversations with each NPC is already fascinating. But wouldn’t it be even more interesting to have a computer-controlled companion, one that can not only chat with you but also follow your voice commands? Then you definitely want to check out Herika, a mod[4] for The Elder Scrolls V: Skyrim. Herika is a ChatGPT-powered AI companion that can understand the player’s audio and text inputs. She is capable of chit-chatting with the player, commenting on game scenes and events, following the player’s various commands, and more.

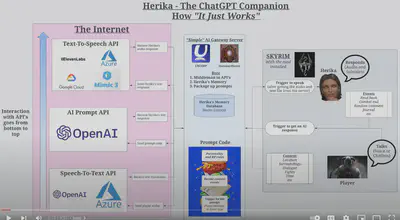

Above is an illustration of Herika’s system design. Here is a brief overview of its main components:

- Audio inputs and outputs are converted to and from text by ASR and TTS modules

- Game objects, scenes, locations, etc., are extracted from the game as text

- The chatting and commenting are all achieved by querying the OpenAI API, in the form of role-playing chats

- Given the player’s command in natural language, command following is achieved by asking GPT to generate formatted commands, which the game engine then uses to control Herika
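The last two components can be sketched in a few lines. This is only an illustration under my own assumptions: the JSON reply format, the action names, and the helper functions `build_messages` and `parse_reply` are all hypothetical and are not taken from Herika’s code. The sketch shows the general idea of putting game context into a role-playing prompt and validating the model’s formatted reply before the game engine acts on it.

```python
import json
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

# Hypothetical command set; the mod's real command names are not given in this post.
ALLOWED_ACTIONS = {"follow", "wait", "attack", "talk"}


@dataclass
class Command:
    action: str
    target: Optional[str] = None


def build_messages(persona: str, game_context: str, player_text: str) -> List[Dict[str, str]]:
    """Assemble a role-playing chat in the common system/user message layout."""
    system = (
        f"You are {persona}, a companion in a fantasy game. "
        f"Current scene: {game_context}. "
        'Reply only with JSON like {"say": "...", "action": "follow", "target": null}. '
        f"Allowed actions: {sorted(ALLOWED_ACTIONS)}."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": player_text},
    ]


def parse_reply(raw: str) -> Tuple[str, Optional[Command]]:
    """Split a model reply into speech and an optional, validated command."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        # The model ignored the format: treat the whole reply as speech.
        return raw.strip(), None
    if not isinstance(data, dict):
        return raw.strip(), None
    say = str(data.get("say", ""))
    action = data.get("action")
    # Never pass an unknown action to the game engine.
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        return say, None
    return say, Command(action, data.get("target"))


messages = build_messages("Herika", "a snowy road near Whiterun", "Attack that wolf!")
# A made-up reply standing in for what the chat API might return.
say, cmd = parse_reply('{"say": "On it!", "action": "attack", "target": "wolf"}')
print(say, cmd)
```

The validation step matters because an LLM can return free text or an action that doesn’t exist; falling back to plain speech keeps the companion talking instead of breaking the game.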

Check out this YouTube video[5] to get a feel for how Herika works. Although it seems to work astonishingly well in the video, Herika currently has the same long response time problem as the Matrix demo. Another issue is that playing with such a companion can burn money quickly, because most of Herika’s functionality is achieved by calling paid APIs. There is still a lot of work to do before this kind of gameplay can become popular, but this mod definitely opens up another bright line for the future!

AI playing Tomb Raider

OK, we’ve already seen AI controlling our companion in the game, so what’s next? Controlling the player character directly, of course! Here is a video of AI playing Tomb Raider[6]. This demo uses techniques similar to Herika’s, such as an LLM and TTS. What’s more, the author seems to have employed several other AI modules too, such as object detection. At the time of writing (08/13/2023), it’s not yet clear how the game character is controlled.

More work of AI playing games, in academia

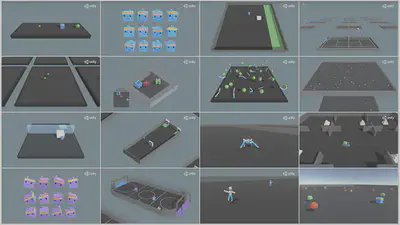

It’s worth noting that using modern AI[7] to play games is not new. Many earlier endeavours exist, such as OpenAI Five[8] playing Dota 2. Many scientific experiments in the RL field were actually conducted in game environments like OpenAI Gym[9] and Unity ML-Agents[10]. However, the research-oriented nature of this line of work keeps it far from revolutionizing the video game industry, and indeed, that was usually not the intention of the researchers.

In recent months, several other research outcomes related to video games have attracted attention, e.g., Generative Agents[11] by Stanford University and CALM[12] by NVIDIA. While Generative Agents focuses more on studying human behaviour than on game playing, CALM presents an algorithm for controlling game characters using textual commands (and hence easily by voice, through ASR). What’s more interesting about CALM is that the model it uses to control the game character is as small as several hundred parameters, making it easy to run locally. Of course, attaching LMs for more flexible natural language understanding can increase the parameter count many times over, but it should still be possible to find a good middle ground between performance and latency.

Outlook

It seems that the technologies for applying modern AI in games are already maturing. Although current AI models, especially generative ones, are often criticised for problems like hallucination, repetition, and unsafe responses, I would argue that such problems are much less destructive in a game world than in real life. It would be very interesting to see more and more games with AI companions that chat with you and give you a hand when asked. To make it more exciting, how about training your AI companion yourself while you’re playing the game? You already generate a lot of (labelled) data when you play, and using it to train your AI companion is theoretically possible. However, popular game engines like Unity and Unreal don’t directly support AI training yet, so it will take some time to make this happen. Games with custom engines are much more flexible, though, and Human-Like[13] is one such game: it uses the data you generate in the game to train an AI opponent online.

If tools aren’t a problem, what about model sizes? In the last couple of years, we saw the best-performing models getting larger and larger, and most of them definitely can’t run on consumer machines. I personally believe increasing the model size isn’t the ultimate answer. Luckily, there is another stream of research studying the grokking[14][15] phenomenon in models as small as an MLP with merely several hundred parameters. LMs probably won’t perform sensibly at this size, but it may be possible to greatly decrease model sizes once we understand their mechanisms well enough.
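To get a feeling for what “several hundred parameters” means, here is a small back-of-the-envelope sketch. The layer sizes and the 7-billion-parameter comparison are numbers I picked purely for illustration; they are not taken from the grokking papers or from any specific model mentioned in this post.

```python
from typing import Sequence


def mlp_param_count(layer_sizes: Sequence[int]) -> int:
    """Weights plus biases of a fully connected MLP with the given layer widths."""
    return sum(
        n_in * n_out + n_out  # weight matrix plus one bias per output unit
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


def weight_bytes(n_params: int, bytes_per_param: int = 4) -> int:
    """Memory needed just to store the weights (4 bytes each for float32)."""
    return n_params * bytes_per_param


# Illustrative tiny MLP: 8 inputs, 16 hidden units, 8 outputs.
tiny = mlp_param_count([8, 16, 8])
print(tiny)                # 280 parameters
print(weight_bytes(tiny))  # 1120 bytes in float32

# Illustrative large model: 7 billion parameters stored as 2-byte floats.
print(weight_bytes(7_000_000_000, 2) / 1e9)  # 14.0 GB for the weights alone
```

The gap between about a kilobyte and many gigabytes is why the size question decides whether a model can run next to the game on a player’s own machine.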

Taking a step back, not too long ago deep learning and video games were almost at two extremes of a spectrum: the former was associated with hard-working researchers, while the latter reminded us of people killing time. Yet gamers are often young, smart people who devote large amounts of time and energy to the games they love. Now that the “two extremes” are finally coming together, maybe something more profound can happen? These are just personal thoughts, and probably unrealistic, but what about, for instance, using LLMs as portals that make players more interested in the real world, and even help them learn practical skills they can use in real life? It might be possible, who knows?

Updates on 08/20/2023

NVIDIA Omniverse ACE

From ACE’s project page:

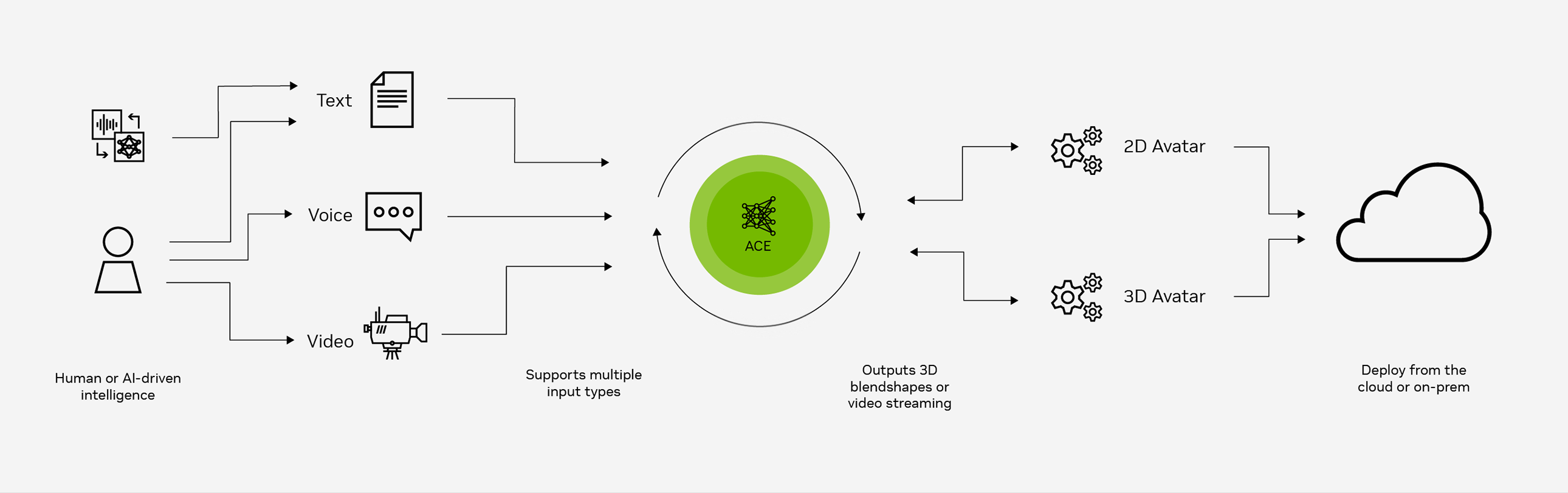

NVIDIA Omniverse™ Avatar Cloud Engine (ACE) is a suite of real-time AI solutions for end-to-end development and deployment of interactive avatars and digital human applications …

In other words, with this toolkit you can build NPCs that communicate with you in speech with synchronised lip movements, and the toolkit also supplies the backend LLMs. While projects like Herika achieved this by combining several independent tools, NVIDIA ACE provides a one-stop solution.

Below is its system design.

Mantella by Art from the Machine

Mantella[16] is a project similar to Replica’s Matrix demo, but set in the world of Skyrim, like Herika.

AgentSims

AgentSims[17] is a work from academia that shares many similarities with Generative Agents; both were released around the same time. One main difference is that AgentSims allows a player to join the town as the mayor and influence the agents by talking with them. It also has an online live demo, which can be found on the project website.

- AI Dungeon: A text-based adventure-story game you direct (and star in) while the AI brings it to life.

- As opposed to traditional AI used in games, which is usually implemented with sets of rules, here by modern AI I mean AI powered by DL and/or RL algorithms.

- Getting Started With OpenAI Gym: The Basic Building Blocks

- Generative Agents: Interactive Simulacra of Human Behavior

- CALM: Conditional Adversarial Latent Models for Directable Virtual Characters

- Grokking: Generalization Beyond Overfitting on Small Algorithmic Datasets

- AgentSims: An Open-Source Sandbox for Large Language Model Evaluation